Hi Derick,

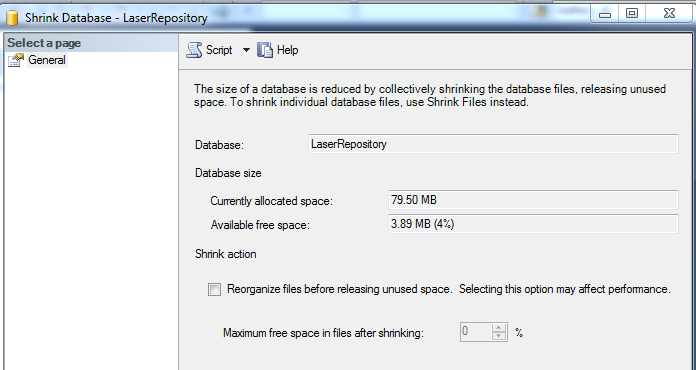

First, open SQL Server Management Studio, right-click the database and choose Tasks -> Shrink -> Database. You should get a screen like the one above. Take note of how much free space is available to be released.
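If you'd rather check this with a query than with the Shrink dialog, something like the following should report the size and free space of each file in the current database. It's a sketch that relies on `sys.database_files` and `FILEPROPERTY(..., 'SpaceUsed')`, both of which count in 8 KB pages (128 pages = 1 MB). Run it in the context of the Laserfiche database.

```sql
-- Size and free space per file of the current database (run inside the LF database).
-- size and FILEPROPERTY(name, 'SpaceUsed') are both in 8 KB pages; /128.0 converts to MB.
SELECT
    name AS LogicalFileName,
    type_desc AS FileType,
    size / 128.0 AS FileSizeMB,
    CAST(FILEPROPERTY(name, 'SpaceUsed') AS int) / 128.0 AS UsedMB,
    size / 128.0 - CAST(FILEPROPERTY(name, 'SpaceUsed') AS int) / 128.0 AS FreeMB
FROM sys.database_files;
```

The FreeMB figure for the data file should be in the same ballpark as the "Available free space" shown in the dialog.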

I wouldn't shrink the DB just for the sake of it. If the 19GB usage is fine and there's enough free space on the HDD, I'd recommend you leave it.

SQL Server automatically grows the data (MDF) file to accommodate additional data. Since the LF Search results are really just "temporary storage", I understand the temptation to free up that space. However, if you take this approach you will find yourself doing it daily, because Laserfiche needs that space to store search results over and over again.
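To see how (and how far) SQL Server is allowed to grow the files, a query along these lines reads the autogrowth settings from `sys.database_files`. When `is_percent_growth` is 0, `growth` is a number of 8 KB pages; a `max_size` of -1 means the file can grow until the disk is full.

```sql
-- Autogrowth increment and maximum size for each file of the current database.
SELECT
    name AS LogicalFileName,
    type_desc AS FileType,
    CASE WHEN is_percent_growth = 1
         THEN CAST(growth AS varchar(10)) + ' %'
         ELSE CAST(growth / 128 AS varchar(10)) + ' MB'    -- growth is in 8 KB pages
    END AS AutoGrowth,
    CASE WHEN max_size = -1
         THEN 'Unlimited'
         ELSE CAST(max_size / 128 AS varchar(20)) + ' MB'  -- max_size is in 8 KB pages
    END AS MaxSize
FROM sys.database_files;
```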

Typically, the SQL database will grow to the largest size it needed at some point during your system's usage. It is better to leave the 19GB as is: SQL Server can simply reuse the already-allocated space next time instead of having to grow the file again, so performance won't suffer.
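If you want evidence of how often the files have had to grow again, the default trace (only if it is still enabled on your instance) records autogrow events. This sketch reads the default trace's current file: EventClass 92 and 93 are data-file and log-file autogrow, `IntegerData` is the growth in 8 KB pages and `Duration` is in microseconds. Older rollover files are not read, so treat the result as a recent sample rather than a full history.

```sql
DECLARE @path nvarchar(260);

-- Path of the default trace's current file (no row if the default trace is disabled).
SELECT @path = path FROM sys.traces WHERE is_default = 1;

SELECT
    DatabaseName,
    FileName,
    StartTime,
    Duration / 1000 AS DurationMs,            -- Duration is in microseconds
    IntegerData * 8 / 1024 AS GrowthMB,       -- IntegerData = pages added (8 KB each)
    CASE EventClass WHEN 92 THEN 'Data file' ELSE 'Log file' END AS GrowthType
FROM sys.fn_trace_gettable(@path, DEFAULT)
WHERE EventClass IN (92, 93)                  -- 92 = Data File Auto Grow, 93 = Log File Auto Grow
  AND DatabaseName = DB_NAME()                -- only the current database
ORDER BY StartTime DESC;
```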

Shrinking also heavily fragments your indexes, which causes performance issues down the line. Only shrink if there is a very good reason for it, for example a runaway workflow that ran searches in a loop and bloated the database.
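If you do have a good reason to shrink, a more targeted option than shrinking the whole database is to shrink only the data file to a sensible target and then check how fragmented the indexes have become. The logical file name `LF_Data` and the 20480 MB target below are hypothetical placeholders; use the real name from `sys.database_files` and a target that leaves room for normal growth.

```sql
-- Shrink one file to a target size in MB. 'LF_Data' is a hypothetical logical file name.
DBCC SHRINKFILE (N'LF_Data', 20480);

-- Afterwards, check index fragmentation (LIMITED mode is the cheapest scan).
SELECT
    OBJECT_NAME(ips.object_id) AS TableName,
    i.name AS IndexName,
    ips.avg_fragmentation_in_percent,
    ips.page_count
FROM sys.dm_db_index_physical_stats(DB_ID(), NULL, NULL, NULL, 'LIMITED') AS ips
INNER JOIN sys.indexes AS i
    ON ips.object_id = i.object_id AND ips.index_id = i.index_id
WHERE ips.page_count > 1000          -- ignore small indexes where fragmentation hardly matters
ORDER BY ips.avg_fragmentation_in_percent DESC;
```

Heavily fragmented indexes can then be rebuilt with `ALTER INDEX ... REBUILD`, bearing in mind that a rebuild needs free space of its own and will grow the file again.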

You can investigate what is using the space in more detail by running this script, which reports how much space each table is taking up:

```sql
-- Space used per user table (in KB), summed across its indexes and allocation units.
SELECT
    t.name AS TableName,
    s.name AS SchemaName,
    p.rows AS RowCounts,
    SUM(a.total_pages) * 8 AS TotalSpaceKB,                      -- pages are 8 KB each
    SUM(a.used_pages) * 8 AS UsedSpaceKB,
    (SUM(a.total_pages) - SUM(a.used_pages)) * 8 AS UnusedSpaceKB
FROM
    sys.tables t
INNER JOIN
    sys.indexes i ON t.object_id = i.object_id
INNER JOIN
    sys.partitions p ON i.object_id = p.object_id AND i.index_id = p.index_id
INNER JOIN
    sys.allocation_units a ON p.partition_id = a.container_id
LEFT OUTER JOIN
    sys.schemas s ON t.schema_id = s.schema_id
WHERE
    t.name NOT LIKE 'dt%'        -- skip old diagram tables such as dtproperties
    AND t.is_ms_shipped = 0      -- user tables only
    AND i.object_id > 255
GROUP BY
    t.name, s.name, p.rows
ORDER BY
    t.name
```
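For a quick ranked view of the biggest tables, a shorter variant based on `sys.dm_db_partition_stats` could look like the sketch below. It reports reserved space in MB and counts rows only from the heap or clustered index (index_id 0 or 1), so rows are not double-counted across nonclustered indexes.

```sql
-- Top 10 user tables by reserved space in the current database.
SELECT TOP (10)
    OBJECT_SCHEMA_NAME(ps.object_id) AS SchemaName,
    OBJECT_NAME(ps.object_id) AS TableName,
    SUM(ps.reserved_page_count) * 8 / 1024.0 AS ReservedMB,      -- 8 KB pages -> MB
    SUM(CASE WHEN ps.index_id IN (0, 1) THEN ps.row_count ELSE 0 END) AS RowCounts
FROM sys.dm_db_partition_stats AS ps
INNER JOIN sys.objects AS o ON ps.object_id = o.object_id
WHERE o.is_ms_shipped = 0 AND o.type = 'U'                       -- user tables only
GROUP BY ps.object_id
ORDER BY ReservedMB DESC;
```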

Cheers

Sheldon