I think I've solved about 95% of this. I believe this is a pretty serious issue for csv remote agent data sources and probably should be fixed.

After spending way too much time reverse engineering how this data source works, I've found a few key things:

1) The name of the data column has nothing to do with the data type the cloud data source chooses

2) The data type appears to be evaluated every time a query is run. I was reluctant to believe this and did most of my testing by first re-verifying the data source. Eventually, I found all I had to do was to just save the file and the data types would work or not work depending upon my data. I find this surprising as refreshing data could potentially change the data type Laserfiche interprets for a column and break an integration.

3) The Lookup seems to scan each column for 13 consecutive data types. If 13 consecutive are found, it uses that data type. If a new data type pops up, it starts over looking for another 13 consecutive types. So, it could find 10 consecutive string values and then 13 consecutive integer values. In this case, the column data type would be set to integer and searches on the string values would be invalid. I'm not completely sure what happens when it doesn't find 13 consecutive values but my best determination is that it uses the first data type found.

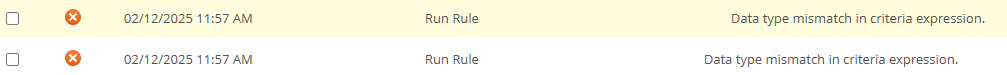

This is pretty convoluted and has to be inefficient. So, I thought maybe once the lookup is published, it fixes the data types and this wouldn't be a problem. So, I tested it. I filled the first 13 rows of my data source with "string" for every row and column. I configured and published the lookup and then built a workflow that runs the lookup rule. My first query ran with no issues. I removed the 13 dummy rows and ran it again:

So, it appears the data type evaluation is run on every column every time a lookup is run. Simple changes in the data source could have severe consequences for integrations that depend upon the csv file.

In my case, the workaround is simple. I'm having to run a PowerShell script to add column headers and change the extension on the csv file every night as a new file is available. To fix this, I'll just fill the first 13 rows with data that forces the correct data type.