Time waits for no man, so we started our own series of experiments. We are running a conversion, which lets us control both the nature of the activity (basically, importing documents) and the volume, for a real A/B test. First, compression. If you turn compression on, you get a 20:1 reduction in the size of the rollover files. A 10 GB audit trail log will compress down to 500 MB. It's dramatic.
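As a quick check on the arithmetic, a 20:1 ratio means the compressed file is one twentieth of the original size (using decimal units, 1 GB = 1000 MB), which is a 95% reduction:

$$
\frac{10\ \text{GB}}{20} = 0.5\ \text{GB} = 500\ \text{MB}, \qquad 1 - \frac{1}{20} = 0.95
$$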

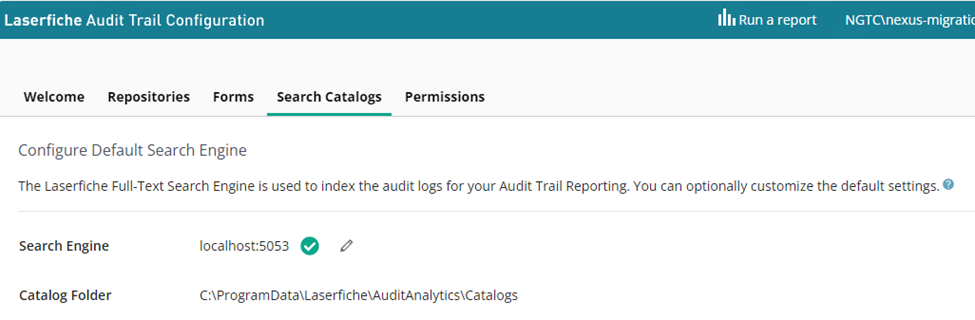

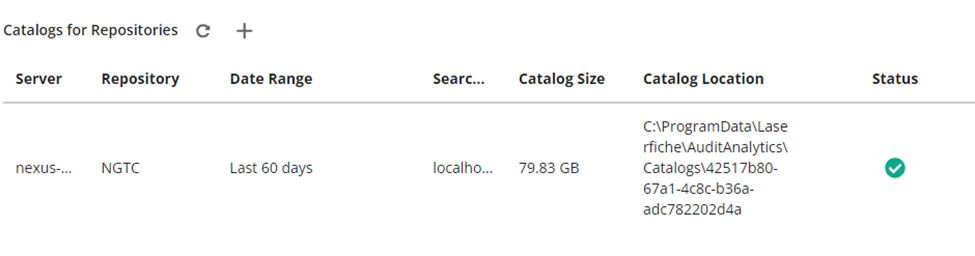

The heart of the Audit Trail system is the search catalog:

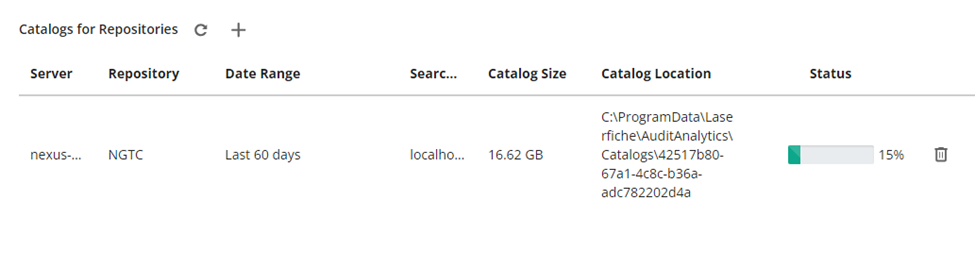

One big difference from the earlier version (10.4) is that changing the amount of live data works differently, and is a little awkward. Basically, you either have to delete and re-create an existing catalog, or create a new one from scratch.

Reports will be offline until this rebuild process is finished.

Recommendation: do NOT accept the default location of `C:\`.

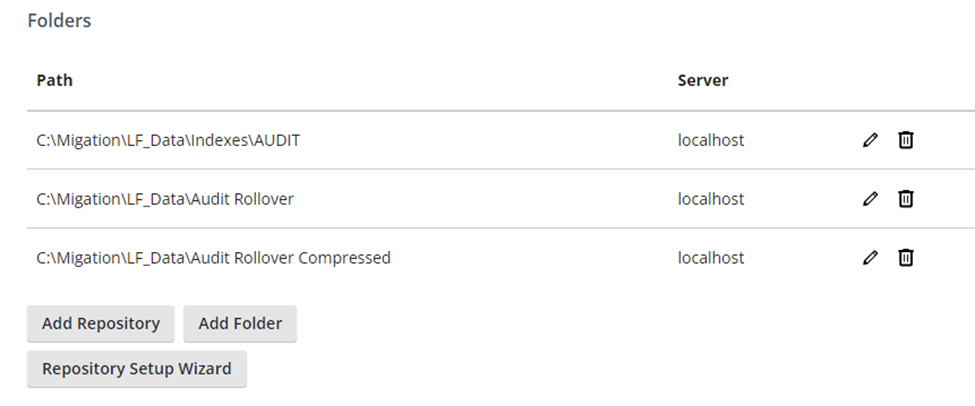

Sometimes the files need to be moved to a new location while remaining live, that is, still managed by the server. To set this up, go to Repositories.

At the bottom of the page, you can add more locations.

Just click the plus sign (+) to add a folder.

Warning! The search catalog can be quite large. This one was 80 GB for 60 days of log files that themselves totaled only 5 GB (a mix of uncompressed and compressed files), a relatively small amount of data. It is probably a good idea to put the catalog on a drive with plenty of free space.

And the C drive is certainly not what you want to use.
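If you want a rough sizing check before picking a drive, here is a minimal sketch that scales the catalog estimate from the single observation above (80 GB of catalog for 5 GB of logs, about 16:1) and lists which candidate drives have room. The ratio comes from one test with a mix of compressed and uncompressed logs, so treat it as a ballpark. The 1.5x headroom factor, the function names, and the drive letters are hypothetical choices for illustration, not part of Audit Trail itself.

```python
import shutil

# Ratio taken from one observed catalog: ~80 GB of catalog for ~5 GB of logs.
# This is a rough, assumption-laden rule of thumb, not a product specification.
OBSERVED_CATALOG_TO_LOG_RATIO = 80 / 5  # about 16 bytes of catalog per byte of log


def estimate_catalog_bytes(log_bytes: float,
                           ratio: float = OBSERVED_CATALOG_TO_LOG_RATIO) -> float:
    """Rough catalog size estimate from the total size of the log files."""
    return log_bytes * ratio


def drives_with_room(candidates, log_bytes: float, headroom: float = 1.5):
    """Return the candidate paths whose free space covers the estimated
    catalog size multiplied by a (hypothetical) safety headroom factor."""
    needed = estimate_catalog_bytes(log_bytes) * headroom
    suitable = []
    for path in candidates:
        try:
            free = shutil.disk_usage(path).free  # bytes free on that volume
        except OSError:
            continue  # drive missing or not accessible: skip it
        if free >= needed:
            suitable.append(path)
    return suitable
```

For example, `drives_with_room(["C:\\", "D:\\", "E:\\"], log_bytes=5e9)` would return only the drives with at least 120 GB free (5 GB × 16 × 1.5).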

The takeaway is that the catalog indexes the heck out of the data, giving up disk space in exchange for performance. Since disk space is inexpensive and your time (and your clients' time) is not, this is a good trade-off. Just be aware that this is how the setup works in V11.

The last question is how this affects reporting performance. We are still testing, but so far there appear to be only small differences between compressed and uncompressed files (once the catalog is built), and only small differences between reports that capture many events and reports that capture far fewer. That makes a lot of sense if the data is largely pre-staged in the search catalog.